Introduction to Distributed Systems

No matter how powerful individual computers become, there are still reasons to harness the power of multiple computational units. As we can make the processors faster to a limit due to the inability of handling heat generated by the excessive flow of electrons.

According to Moore’s Law, the density of transistors on a chip doubles every 18 months for the same cost. However the curve is getting into saturation now-a-days as the transistors in a processor are nearly one atom thick and we can’t go beyond that. Moreover, we have a memory overload i.e. Even if we could develop a processor of 6 GHz, there will still be a bottleneck with the speed of RAM i.e, we will wait the saved time for the data to come up.

And this is where Distributed Systems came into existence. We can’t increase the processing power of a single processor much(also known as vertical scalability) but we can use more computers( also known as horizontal scalability).Distributed Systems can be used where a problem can be reduced to smaller independent problems.

Parallel Computing vs Distributed Computing

I often see people getting confused about parallel computing and distributed computing, but I’m under the impression that there is no clear boundary between the two.

Parallel computing is more tightly coupled to multi-threading, or how to make full use of multiple CPUs in a Single Computer.

On the other hand, Distributed computing refers to the notion of divide and conquer, executing sub-tasks on different machines connected through a network and then merging the results. The computers in a distributed systems are independent and don’t share memory or processor.

Parallelization is easy if processing can be cleanly split into n units. In a parallel computation, we would like to have as many threads as we have processors. eg. a quad-core processor would be able to run 4 threads at the same time.

[IMAGE]

Parallelization Pitfall

While, we can improve the processing performance using parallelization, but still there are some pitfall which needs to be understand.

- How do we assign works units to workers thread?

- What if we have more work units than threads?

- How do we aggregate the results at the end?

- How do we know, all the workers have finished?

The common theme of all the above problems is Communication. Each of these problems represents a point at which multiple threads must communicate with one another or across a shared resource.

So, what’s the problem in using shared resource now?

There are two criteria for correctness in parallel computation environments. The first is that the outcome should always be the same. The second is that the outcome should be the same as if the code was executed in serial.

Problem arises when one process influences another during critical section of a program. Let’s see this with an example. Let’s say we have two threads running parallely having

Initial State: y = 0 ; x = 6

Thread 1

void foo(){

x++;

y=x;

}

Thread 2

void bar(){

y++;

x+=3;

}

What would be final state?

x = 7? 9? 10?

y = 1? 7? 10?

Race Condition!!

Golden Rule: Any memory that can be used by multiple threads mush have an associated synchronization systems.

To enforce the atomicity of critical sections in a program’s code under concurrency , there need to be ways to force processes to either serialize or synchronize with each other at important times. Serialization means that only one process runs at a time – that they temporarily act as if they were being executed in serial.

Protecting Shared State: Synchronization Primitives

All the methods of synchronization and serialization discussed above uses same underlying idea. They uses a variable in a shared state as a signal that all process can access and respect. Mutex and semaphore are the variables that are used to guarantee synchronization. Let’s look at the Toilet Example to clearly understand the difference between the two,

Mutex:

Is a key to a toilet. One person can have the key - occupy the toilet - at the time. When finished, the person gives (frees) the key to the next person in the queue.

Officially: “Mutexes are typically used to serialise access to a section of re-entrant code that cannot be executed concurrently by more than one thread. A mutex object only allows one thread into a controlled section, forcing other threads which attempt to gain access to that section to wait until the first thread has exited from that section.” Ref: Symbian Developer Library

(A mutex is really a semaphore with value 1.)

Semaphore:

Is the number of free identical toilet keys. Example, say we have four toilets with identical locks and keys. The semaphore count - the count of keys - is set to 4 at beginning (all four toilets are free), then the count value is decremented as people are coming in. If all toilets are full, ie. there are no free keys left, the semaphore count is 0. Now, when eq. one person leaves the toilet, semaphore is increased to 1 (one free key), and given to the next person in the queue.

Officially: “A semaphore restricts the number of simultaneous users of a shared resource up to a maximum number. Threads can request access to the resource (decrementing the semaphore), and can signal that they have finished using the resource (incrementing the semaphore).” Ref: Symbian Developer Library

Here, the toilet would be thought up as the critical section.

The Corrected Example

Thread 1

void foo(){

sem.lock();

x++;

y = x;

sem.unlock();

}

Thread 2

void bar(){

sem.lock();

y++;

x+=3;

sem.unlock();

}

Semaphores have prevented both threads from manipulating x and y at the same time. However, they aren’t sufficient to ensure there is only one total flow that can modify x and y. And the way we, do that is through Condition Variable.

Condition variables are objects that act as signals that a condition has been satisfied. They are commonly used to coordinate processes that need to wait for something to happen before continuing. Processes that need the condition to be satisfied can make themselves wait on a condition variable until some other process modifies it to tell them to proceed.

The final Example

Thread 1

void foo(){

sem.lock();

x++;

y = x;

fooDone = true;

sem.unlock();

fooFinishedCV.notify();

}

Thread 2

void bar(){

sem.lock();

if(!fooDone)

fooFinishedCV.wait(sem);

y++;

x+=3;

sem.unlock();

}

Here, wait() makes the thread sleep and notify() wakes up the sleeping thread.

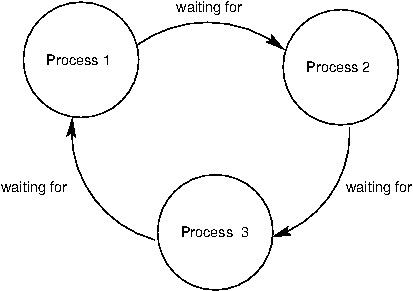

Too much Synchronization? Deadlock!

While synchronization methods are effective for protecting shared state, they come with a catch. Because they cause processes to wait on each other, they are vulnerable to deadlock, a situation in which two or more processes are stuck, waiting for each other to finish.

Synchronization is hard, we need to consider all possible shared state and we must keep locks organised and use them consistently and correctly. It becomes even more complicated when multiple locks can be used.

Every time, a shared state is being modified, we lock it, but this will slow down our program because we would effectively be running as fast as running on one thread any time shared state is entered.

So, we would like to keep our shared state to the minimum and Map Reduce gives us a programming paradigm that eliminates this need for us by doing all the locking under the hood and asking us to phrase our programming problem that eliminates shared state.

For some time, I would be posting about Distributed Systems. If you guys want to go deeper into this field, I strongly recommend taking MIT’s 6.824 course.

Reference:

http://wla.berkeley.edu/~cs61a/fa11/lectures/communication.html

https://www.slideshare.net/tugrulh/google-cluster-computing-and-mapreduce-introduction-to-distributed-system-design